Private LLM - Local AI Chat 1.9.9

Publisher Description

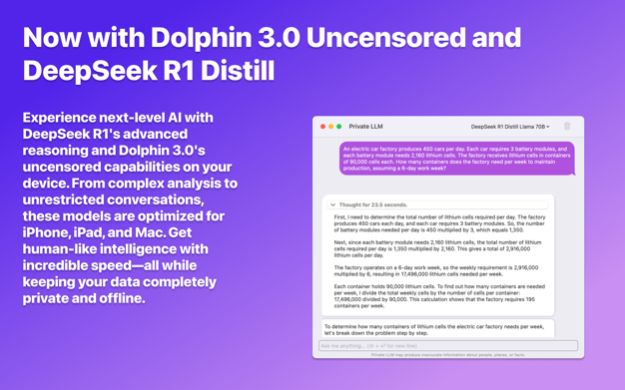

Meet Private LLM: Your Secure, Offline AI Assistant for macOS

Private LLM brings advanced AI capabilities directly to your iPhone, iPad, and Mac—all while keeping your data private and offline. With a one-time purchase and no subscriptions, you get a personal AI assistant that works entirely on your device.

Key Features:

- Local AI Functionality: Interact with a sophisticated AI chatbot without needing an internet connection. Your conversations stay on your device, ensuring complete privacy.

- Wide Range of AI Models: Choose from various open-source LLMs such as Llama 3.2, Llama 3.1, Google Gemma 2, Microsoft Phi-3, Mistral 7B, and StableLM 3B. Each model is optimized for iOS and macOS hardware using advanced OmniQuant quantization, which offers superior performance compared to traditional round-to-nearest (RTN) quantization.

- Siri and Shortcuts Integration: Create AI-driven workflows without writing code. Use Siri commands and Apple Shortcuts to enhance productivity in tasks like text parsing and generation.

- No Subscriptions or Logins: Enjoy full access with a single purchase. No need for subscriptions, accounts, or API keys. Plus, with Family Sharing, up to six family members can use the app.

- AI Language Services on macOS: Utilize AI-powered tools for grammar correction, summarization, and more across various macOS applications in multiple languages.

- Superior Performance with OmniQuant: Benefit from the advanced OmniQuant quantization process, which preserves the model's weight distribution for faster and more accurate responses, outperforming apps that use standard quantization techniques.

Supported Model Families:

- DeepSeek R1 Distill based models

- Phi-4 14B model

- Llama 3.3 70B based models

- Llama 3.2 based models

- Llama 3.1 based models

- Llama 3.0 based models

- Google Gemma 2 based models

- Qwen 2.5 based models (0.5B to 32B)

- Qwen 2.5 Coder based models (0.5B to 32B)

- Google Gemma 3 1B based models

- Solar 10.7B based models

- Yi 34B based models

For a full list of supported models, including detailed specifications, please visit privatellm.app/models.

Private LLM is a better alternative to generic llama.cpp and MLX wrapper apps like Enchanted, Ollama, LLM Farm, LM Studio, RecurseChat, etc. on three fronts:

1. Private LLM uses a significantly faster mlc-llm based inference engine.

2. All models in Private LLM are quantized using the state-of-the-art OmniQuant quantization algorithm, while competing apps use naive round-to-nearest quantization.

3. Private LLM is a fully native app built using C++, Metal and Swift, while many of the competing apps are bloated and non-native Electron JS based apps.
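
For readers unfamiliar with the baseline named in point 2, round-to-nearest (RTN) quantization can be sketched in a few lines of Python. This is an illustrative sketch only: the function names and sample values are hypothetical, it is not code from Private LLM or any competing app, and it does not implement OmniQuant.

```python
def rtn_quantize(weights, bits=4):
    """Map floats to unsigned integer codes by plain round-to-nearest."""
    levels = (1 << bits) - 1              # 15 for 4-bit codes
    w_min, w_max = min(weights), max(weights)
    # Step size between adjacent codes; fall back to 1.0 if all weights are equal.
    scale = (w_max - w_min) / levels or 1.0
    codes = [min(levels, max(0, round((w - w_min) / scale))) for w in weights]
    return codes, scale, w_min


def rtn_dequantize(codes, scale, w_min):
    """Recover approximate floats from the integer codes."""
    return [c * scale + w_min for c in codes]


weights = [-1.0, -0.5, 0.0, 0.5, 1.0]     # made-up example values
codes, scale, w_min = rtn_quantize(weights)
restored = rtn_dequantize(codes, scale, w_min)
# Largest rounding error; about half a step at most for values inside the range.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

RTN derives the step size purely from the smallest and largest weight, so a single outlier stretches the step for every other value. Methods such as OmniQuant aim to choose the quantization parameters more carefully; those details are outside this sketch.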

Please note that Private LLM only supports inference with text based LLMs.

Private LLM has been specifically optimized for Apple Silicon Macs. Private LLM for macOS delivers the best performance on Macs equipped with the Apple M1 or newer chips. Users on older Intel Macs without eGPUs may experience reduced performance. Please note that although the app nominally works on Intel Macs, we've stopped adding support for new models on Intel Macs due to performance issues associated with Intel hardware.

Apr 22, 2025

Version 1.9.9

- Added support for 3-bit and 4-bit OmniQuant quantized versions of the Perplexity r1-1776-distill-llama-70b model

- Added support for a 4-bit OmniQuant quantized version of the Llama-3.1-8B-UltraMedical model

- Added support for a 4-bit OmniQuant quantized version of the Meta-Llama-3.1-8B-SurviveV3 survival specialist model

- Added support for 4-bit GPTQ quantized versions of the openhands 7B and 32B coding models

- Added support for a 4-bit QAT version of the Google Gemma3 1B IT model

- Added support for 4-bit OmniQuant quantized versions of the Google Gemma3 1B based gemma-3-1b-it-abliterated and amoral-gemma3-1B-v2 models

- Other minor bug fixes and updates

About Private LLM - Local AI Chat

The company that develops Private LLM - Local AI Chat is Numen Technologies Limited. The latest version released by its developer is 1.9.9.

To install Private LLM - Local AI Chat on your iOS device, click the green Continue To App button above to start the installation process. The app has been listed on our website since 2025-04-22 and has been downloaded 51 times. We have already checked that the download link is safe; however, for your own protection we recommend that you scan the downloaded app with your antivirus. Your antivirus may detect Private LLM - Local AI Chat as malware if the download link is broken.

How to install Private LLM - Local AI Chat on your iOS device:

- Click on the Continue To App button on our website. This will redirect you to the App Store.

- Once Private LLM - Local AI Chat is shown in the iTunes listing on your iOS device, you can start its download and installation. Tap the GET button to the right of the app to start downloading it.

- If you are not logged in to the iOS App Store app, you'll be prompted for your Apple ID and/or password.

- After Private LLM - Local AI Chat is downloaded, you'll see an INSTALL button to the right. Tap on it to start the actual installation of the iOS app.

- Once installation is finished you can tap on the OPEN button to start it. Its icon will also be added to your device home screen.

Version History

version 1.9.9

posted on 2025-04-22

version 1.9.8

posted on 2025-02-16

Feb 16, 2025

Version 1.9.8

- Added support for 3-bit OmniQuant quantized versions of Llama 3.3 70B-based models (5 new models)

- Added support for 3-bit and 4-bit OmniQuant quantized versions of the EVA LLaMA 3.33 70B v0.1 model

- Added support for 8 new models from the Dolphin 3.0 family of models

- Added support for the unquantized version of the Llama 3.2 1B Instruct Abliterated model

- Added support for the 4-bit OmniQuant quantized Gemma 2 Ifable 9B creative writing model

- Context length is now displayed in the model quick switcher

- Fixed a crash with some newer models on older versions of macOS (Sonoma)

- Other minor bug fixes and updates

version 1.9.7

posted on 2025-01-27

Jan 27, 2025

Version 1.9.7

- Support for downloading 7 new DeepSeek R1 Distill based models on Apple Silicon Macs. Support for individual models varies by device capabilities.

- Users with Apple Silicon Macs with 16GB of RAM can now download the phi-4 model (previously restricted to Apple Silicon Macs with 24GB of RAM).

- Minor bugfixes and updates.

version 1.9.5

posted on 2024-12-20

Dec 20, 2024

Version 1.9.5

- Support for downloading 16 new models (varies by device capacity).

- Three new Llama 3.3 based uncensored models: EVA-LLaMA-3.33-70B-v0.0, Llama-3.3-70B-Instruct-abliterated and L3.3-70B-Euryale-v2.3.

- Hermes-3-Llama-3.2-3B and Hermes-3-Llama-3.1-8B models.

- FuseChat-Llama-3.2-1B-Instruct, FuseChat-Llama-3.2-3B-Instruct, FuseChat-Llama-3.1-8B-Instruct, FuseChat-Qwen-2.5-7B-Instruct and FuseChat-Gemma-2-9B-Instruct models.

- FuseChat-Llama-3.2-1B-Instruct also comes with an unquantized variant.

- EVA-D-Qwen2.5-1.5B-v0.0, EVA-Qwen2.5-7B-v0.1, EVA-Qwen2.5-14B-v0.2 and EVA-Qwen2.5-32B-v0.2 models.

- Llama-3.1-8B-Lexi-Uncensored-V2 model

- Improved LaTeX rendering

- Stability improvements and bug fixes.

Thank you for choosing Private LLM. We are committed to continuing to improve the app and making it more useful for you. For support requests and feature suggestions, please feel free to email us at support@numen.ie, or tweet us @private_llm. If you enjoy the app, leaving an App Store review is a great way to support us.

version 1.9.4

posted on 2024-12-09

Dec 9, 2024

Version 1.9.4

- Support for downloading the latest Meta Llama 3.3 70B model (on Apple Silicon Macs with 48GB or more RAM).

version 1.9.2

posted on 2024-10-16

Oct 16, 2024

Version 1.9.2

- Bugfix release: fix for crash while loading some of the older models that use the sentencepiece tokenizer.

- Dropped support for Llama 3.2 1B and 3B models on Intel Macs due to stability issues.

version 1.9.0

posted on 2024-09-17

Sep 17, 2024

Version 1.9.0

- Support for 2 new models from the Gemma 2 family of models (on Apple Silicon Macs).

- 4-bit OmniQuant quantized version of the gemma-2-2b-it model.

- 4-bit OmniQuant quantized version of the multilingual SauerkrautLM-gemma-2-2b-it model.

- Stability improvements and bug fixes.

version 1.8.9

posted on 2024-07-28

Jul 28, 2024

Version 1.8.9

- Support for downloading 5 new models. Three models from the new Meta Llama 3.1 family of models and two Meta Llama 3 based models (Support varies by device capabilities).

- 4-bit OmniQuant quantized version of the Meta Llama 3.1 8B Instruct model.

- 4-bit OmniQuant quantized version of the Meta Llama 3.1 8B Instruct abliterated model.

- 4-bit OmniQuant quantized version of the Meta Llama 3.1 70B Instruct model.

- 4-bit OmniQuant quantized version of the Llama 3 based L3 Umbral Mind RP v3.0 model.

- 4-bit OmniQuant quantized version of the Llama 3 based Llama 3 Instruct 8B SPPO Iter3 model.

- Stability improvements and bug fixes.

version 1.8.8

posted on 2024-06-19

Jun 19, 2024

Version 1.8.8

- Support for downloading 12 new models (support varies by device capabilities).

- 4-bit OmniQuant quantized version of Mistral 7B Instruct v0.3

- 4-bit OmniQuant quantized version of Meta-Llama-3-8B-Instruct-abliterated-v3

- 4-bit OmniQuant quantized version of Llama-3-8B-Instruct-MopeyMule

- 4-bit OmniQuant quantized version of openchat-3.6-8b-20240522

- 4-bit OmniQuant quantized version of Llama-3-WhiteRabbitNeo-8B-v2.0

- 4-bit OmniQuant quantized version of Hermes-2-Theta-Llama-3-8B

- 4-bit OmniQuant quantized version of LLaMA3-iterative-DPO-final

- 4-bit OmniQuant quantized version of Hathor_Stable-v0.2-L3-8B

- 4-bit OmniQuant quantized version of NeuralDaredevil-8B-abliterated

- 3 and 4-bit OmniQuant quantized versions of Smaug-Llama-3-70B-Instruct

- 3 and 4-bit OmniQuant quantized versions of Smaug-Llama-3-70B-Instruct-abliterated-v3

- 3 and 4-bit OmniQuant quantized versions of Cat-Llama-3-70B-instruct

- Minor UI improvements

- Stability improvements and bug fixes.

version 1.8.7

posted on 2024-05-13

May 13, 2024

Version 1.8.7

- Support for downloading a 3-bit OmniQuant quantized version of the Meta-Llama-3-70B-Instruct model on Apple Silicon Macs with 48GB or more RAM.

- Stability improvements and bug fixes.
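
As a rough back-of-the-envelope check on the 48GB figure above, the weight storage of a quantized model is approximately its parameter count times the bits per weight. The sketch below uses a hypothetical helper and counts weights only. It assumes nothing about how Private LLM actually allocates memory, and it ignores quantization metadata, the KV cache, and memory used by macOS itself.

```python
def weight_memory_gb(params_billion, bits_per_weight):
    """Approximate weight storage in GB (1 GB = 1e9 bytes), weights only."""
    total_bits = params_billion * 1e9 * bits_per_weight
    return total_bits / 8 / 1e9


three_bit = weight_memory_gb(70, 3)   # 26.25
four_bit = weight_memory_gb(70, 4)    # 35.0
```

Under these assumptions, a 3-bit 70B model needs about 26 GB for weights alone, which leaves headroom on a 48GB Mac for the overheads not counted here.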

If you have any feedback or questions, we'd love to hear from you! Numen Technologies offers free tech support; you can email: support@numen.ie, message us on our Discord, or Tweet at us @private_llm. If you find Private LLM to be useful, we'd appreciate a review on the App Store. Your review will help other people find Private LLM.